Hey everyone!

VTubing has gone through some crazy transformations over the years. From simple Live2D avatars to AI-driven 3D models, digital performers are getting more advanced than ever. Let’s take a look at how things have evolved!

The Evolution of VTubers

Early Days: PNG & Live2D VTubers (2016-2019)

[Insert image of early VTubers like Kizuna AI, simple Live2D models, or PNGTubers]

VTubers started with 2D sprites or simple Live2D rigs that could blink and move slightly.

Expressions were limited, with most movement controlled by basic face-tracking or hotkeys.

Example: Kizuna AI led the charge, inspiring the VTubing boom.

3D VTubers & Motion Capture (2020-Present)

The jump to 3D avatars allowed for full-body motion tracking using VR setups.

This opened the door to more immersive performances, like dancing, expressive hand gestures, and even full concerts!

Tools like VRChat, VSeeFace, and Unreal Engine made high-quality 3D VTubing more accessible.

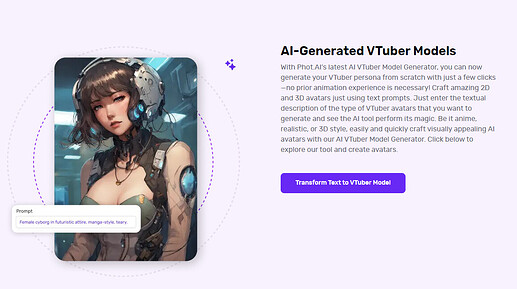

AI-Powered VTubers: The Next Generation (Now & Future)

AI is now enhancing VTubers with real-time facial tracking, AI-generated voices, and chatbots.

Some VTubers no longer need a human behind them at all: LLMs (like GPT-4), AI text-to-speech (like ElevenLabs), and adaptive personalities make fully autonomous VTubers possible!

Some fans are experimenting with AI VTubers that learn and evolve based on interactions.

What’s Next?

With AI advancing so fast, could we see completely independent AI VTubers running their own channels? Or will human-controlled VTubers always have an edge?

What do you think? Do you prefer human-controlled VTubers, or are AI-driven VTubers the future?

To me, AI VTubers will definitely have their place, maybe as background streamers, interactive NPCs, or even virtual co-hosts. But human-controlled VTubers still offer something AI can’t replicate: authenticity, real emotions, and true creativity. It’s much like AI-generated text, which we often criticize for feeling emotionless and lacking that human authenticity.