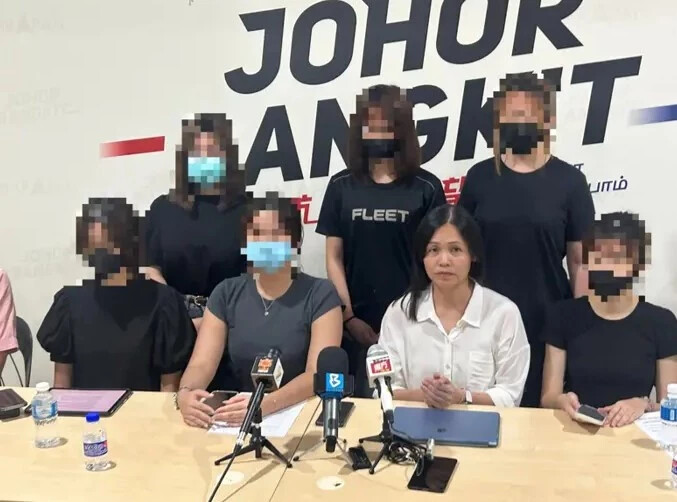

In a troubling sign of how fast AI abuse is outpacing protections, Malaysia is currently grappling with a serious deepfake scandal—one that has exposed the terrifying potential of synthetic media when used with malicious intent.

According to Deputy Communications Minister Teo Nie Ching, at least 38 individuals, including school students as young as 12, have fallen victim to AI-generated explicit content. The deepfakes superimpose the victims’ faces onto pornographic imagery without their knowledge or consent.

What’s even more alarming is the response—or lack thereof—from schools. Teo called out educational institutions for failing to take complaints seriously, urging them to strengthen their standard operating procedures (SOPs) to protect students from this form of digital exploitation. She emphasized that both public and private schools must act swiftly to prevent further harm, especially as the tools to create hyper-realistic AI-generated images become more accessible and sophisticated.

This case is not isolated. It’s a symptom of a broader, rapidly growing crisis surrounding deepfake technology and online safety. AI-generated explicit content is becoming easier to produce and distribute, often leaving victims—especially minors—without a clear path to justice or emotional recovery.

What This Means:

- There’s a growing need for AI-specific legislation that protects minors and punishes those who exploit this tech for harm.

- Schools and institutions must adopt digital literacy and safety programs—not just for students, but for staff as well.

- Tech platforms need to be held accountable for the spread of deepfake content and invest in detection tools and takedown procedures.

Future’s gonna be rough.